In the last tutorial, we implemented Simple Linear Regression to fit a model. Today, we will learn about a classification algorithm, Logistic Regression, using Python. Every machine learning algorithm works best under a given set of conditions, and making sure your algorithm fits its assumptions and requirements leads to better performance. You cannot use any algorithm in any situation. For example, we should not use linear regression on a categorical dependent variable: its predictions are not restricted to valid class labels or probabilities, and we would typically get extremely low values of adjusted R² and the F statistic. Instead, in such situations, we should try algorithms such as Logistic Regression, Decision Trees, Support Vector Machines, Random Forests, etc.

Brief Overview of Logistic Regression

Logistic Regression is one of the most popular ways to fit models for categorical data, especially binary response data. It is the most important (and probably most used) member of the class of models called generalized linear models. Unlike linear regression, logistic regression directly predicts probabilities, i.e. values restricted to the (0, 1) interval. Furthermore, those probabilities are generally well-calibrated compared with the probabilities predicted by some other classifiers, such as Naive Bayes. Logistic regression also preserves the marginal probabilities of the training data: for a maximum-likelihood fit with an intercept, the average predicted probability equals the observed proportion of positive cases. The coefficients of the model also give some hint of the relative importance of each input variable, especially when the inputs are on comparable scales.
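To make the "restricted to (0, 1)" point concrete, here is a minimal sketch of how a logistic model turns a linear combination of inputs into a probability with the sigmoid function. The coefficients `b0`, `b1`, `b2` and the two input rows are hypothetical values chosen only for illustration; they are not fitted to any data.

```python
import numpy as np

def sigmoid(z):
    """Logistic (sigmoid) function: maps any real z into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical coefficients for a model with two inputs (illustration only).
b0, b1, b2 = -1.0, 0.8, 0.5

# Two hypothetical observations, one per row: [x1, x2].
X = np.array([[0.0, 0.0],
              [2.0, 1.0]])

# Linear combination (the log-odds), then squashed into a probability.
z = b0 + b1 * X[:, 0] + b2 * X[:, 1]
p = sigmoid(z)

# Taking the log-odds of p recovers the linear score z.
log_odds = np.log(p / (1.0 - p))
print(z, p)
```

Whatever the value of the linear score, the output of `sigmoid` stays strictly between 0 and 1, which is why it can be read as a probability.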

Logistic Regression is used when the dependent variable is categorical. For example: to predict whether a tumor is malignant (1) or not (0), whether an email is spam (1) or not (0), etc.

Logistic Regression is generally used when the dependent variable is binary (dichotomous), meaning it can take only two possible values such as “Yes or No”, “Default or No Default”, “Living or Dead”, etc. The independent variables can be categorical or numerical.

Let’s code!

In this tutorial, we use the ‘Social_Network_Ads.csv’ dataset.

```python
# importing libraries
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd

# importing the dataset
dataset = pd.read_csv('Social_Network_Ads.csv')
```

As a quick reminder, this dataset contains information about users of a social network: user ID, gender, age, estimated salary, and whether they purchased. The social network has several business clients that can place ads on it, and one of these clients is a car company that has just launched a brand-new luxury SUV at a ridiculous price. We are trying to find out which users of the social network are going to buy this SUV. We will build a model that predicts whether a user buys the product based on two variables, "age" and "estimated salary", by finding correlations between a user's age and estimated salary and their decision to purchase (yes or no).

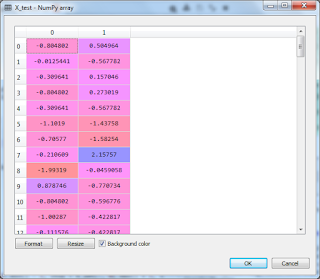

```python
X = dataset.iloc[:, [2, 3]].values  # age and estimated salary
y = dataset.iloc[:, 4].values       # purchased (0 or 1)

# splitting the dataset into training and test sets
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
```

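The y variable contains the purchased column (position 4), while X contains the age and estimated salary columns (positions 2 and 3). With `test_size=0.25`, a quarter of the rows go to the test set.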
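Since the CSV file itself is not shown here, the following sketch builds a hypothetical stand-in DataFrame with the same five-column layout to show what the positional `iloc` selection and the 75/25 split produce. The column names and all values are invented for illustration; only the column order follows the description above.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Hypothetical stand-in with the same five-column layout described above;
# the column names and values are invented for illustration.
rng = np.random.default_rng(0)
n = 400
dataset = pd.DataFrame({
    "User ID": np.arange(n),
    "Gender": rng.choice(["Male", "Female"], size=n),
    "Age": rng.integers(18, 60, size=n),
    "EstimatedSalary": rng.integers(15_000, 150_000, size=n),
    "Purchased": rng.integers(0, 2, size=n),
})

X = dataset.iloc[:, [2, 3]].values   # columns at positions 2 and 3: age, salary
y = dataset.iloc[:, 4].values        # column at position 4: purchased (0/1)

# 25% of the rows are held out as the test set.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
print(X.shape, X_train.shape, X_test.shape)  # (400, 2) (300, 2) (100, 2)
```

Selecting by position with `iloc` means the code does not depend on the exact column names, only on their order in the file.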

Because the age and estimated salary variables are on very different scales, the solver can converge slowly and the default regularization penalty treats the two coefficients unevenly. To avoid these issues, we apply feature scaling.

```python
# feature scaling: fit on the training set only, then apply the same transform to the test set
from sklearn.preprocessing import StandardScaler
sc_X = StandardScaler()
X_train = sc_X.fit_transform(X_train)
X_test = sc_X.transform(X_test)
```
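Standardization replaces each value x with (x - mean) / std, where the mean and standard deviation are learned from the training set only. The sketch below checks that `StandardScaler` matches that formula on a tiny hypothetical matrix (the age and salary numbers are invented) and that the test row is transformed with the training statistics.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Small hypothetical matrix: column 0 ~ age, column 1 ~ salary (invented values).
X_train = np.array([[25.0, 30_000.0],
                    [35.0, 60_000.0],
                    [45.0, 90_000.0]])
X_test = np.array([[30.0, 45_000.0]])

sc = StandardScaler()
X_train_scaled = sc.fit_transform(X_train)   # learns mean_ and scale_ from training data
X_test_scaled = sc.transform(X_test)         # reuses the training statistics

# The same transformation by hand: z = (x - mean) / std, with population std (ddof=0).
mean = X_train.mean(axis=0)
std = X_train.std(axis=0)
manual_test = (X_test - mean) / std
print(X_test_scaled, manual_test)
```

Calling `fit_transform` on the test set instead would leak test statistics into preprocessing, which is why the tutorial uses `transform` there.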

```python
# fitting logistic regression to the training set
from sklearn.linear_model import LogisticRegression
classifier = LogisticRegression(random_state=0)
classifier.fit(X_train, y_train)
```

Our classifier learns the correlations between X_train and y_train. Having learned them, it can make predictions on new observations, and we can test its predictive power on a different set: the test set.
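What the fitted classifier actually stores is one weight per feature plus an intercept. The sketch below, which uses synthetic two-feature data from `make_classification` as a stand-in for the scaled social-network data, shows that `predict_proba` is the sigmoid of the linear score and that `predict` assigns class 1 exactly when that score is positive (probability above 0.5).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic two-feature binary data as a stand-in for the scaled training set.
X_train, y_train = make_classification(n_samples=200, n_features=2, n_informative=2,
                                       n_redundant=0, random_state=0)

classifier = LogisticRegression(random_state=0)
classifier.fit(X_train, y_train)

# Learned parameters: one weight per feature plus an intercept.
w, b = classifier.coef_[0], classifier.intercept_[0]

# Probability of class 1, computed by hand from the linear score and via the API.
z = X_train @ w + b
p_manual = 1.0 / (1.0 + np.exp(-z))
p_api = classifier.predict_proba(X_train)[:, 1]

# predict() assigns class 1 when the score is positive, i.e. when p > 0.5.
labels = classifier.predict(X_train)
```

If you need a threshold other than 0.5, comparing `predict_proba` output against your own cutoff is a common alternative to `predict`.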

After fitting the model, we predict the test set results.

```python
# predicting the test set results
y_pred = classifier.predict(X_test)
```

Now, let's create the confusion matrix to see the correct and incorrect predictions.

```python
# making the confusion matrix
from sklearn.metrics import confusion_matrix
cm = confusion_matrix(y_test, y_pred)
```
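In scikit-learn's confusion matrix, rows are the true classes and columns are the predicted classes, both in sorted label order. For labels 0 and 1 that gives [[TN, FP], [FN, TP]]. The sketch below uses a handful of hypothetical labels (invented, not from the dataset) to show how to unpack the four counts and derive accuracy from them.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, accuracy_score

# Hypothetical true labels and predictions (invented for illustration).
y_test = np.array([0, 0, 1, 1, 1, 0])
y_pred = np.array([0, 1, 1, 0, 1, 0])

cm = confusion_matrix(y_test, y_pred)
# Rows are true classes, columns are predicted classes (label order 0, 1):
# [[TN, FP],
#  [FN, TP]]
tn, fp, fn, tp = cm.ravel()
print(cm)  # [[2 1]
           #  [1 2]]

# Correct predictions sit on the diagonal.
accuracy = (tn + tp) / cm.sum()
```

The diagonal counts the correct predictions, so summing it and dividing by the total gives the same value as `accuracy_score`.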

Now, we are going to make a graph to clearly see the regions where our logistic regression model predicts "Yes" or "No".

```python
# visualizing the training set results
from matplotlib.colors import ListedColormap

X_set, y_set = X_train, y_train
# dense grid covering the (scaled) feature space, padded by 1 on each side
X1, X2 = np.meshgrid(np.arange(start = X_set[:, 0].min() - 1, stop = X_set[:, 0].max() + 1, step = 0.01),
                     np.arange(start = X_set[:, 1].min() - 1, stop = X_set[:, 1].max() + 1, step = 0.01))
# colour every grid point by the class the classifier predicts for it
plt.contourf(X1, X2, classifier.predict(np.array([X1.ravel(), X2.ravel()]).T).reshape(X1.shape),
             alpha = 0.75, cmap = ListedColormap(('red', 'green')))
plt.xlim(X1.min(), X1.max())
plt.ylim(X2.min(), X2.max())
# overlay the actual observations, one colour per true class
for i, j in enumerate(np.unique(y_set)):
    plt.scatter(X_set[y_set == j, 0], X_set[y_set == j, 1],
                color = ListedColormap(('red', 'green'))(i), label = j)
plt.title('Logistic Regression (Training set)')
plt.xlabel('Age')
plt.ylabel('Estimated Salary')
plt.legend()
plt.show()
```

Then, we visualize the test set results.

```python
# visualizing the test set results
from matplotlib.colors import ListedColormap

X_set, y_set = X_test, y_test
# dense grid covering the (scaled) feature space, padded by 1 on each side
X1, X2 = np.meshgrid(np.arange(start = X_set[:, 0].min() - 1, stop = X_set[:, 0].max() + 1, step = 0.01),
                     np.arange(start = X_set[:, 1].min() - 1, stop = X_set[:, 1].max() + 1, step = 0.01))
# colour every grid point by the class the classifier predicts for it
plt.contourf(X1, X2, classifier.predict(np.array([X1.ravel(), X2.ravel()]).T).reshape(X1.shape),
             alpha = 0.75, cmap = ListedColormap(('red', 'green')))
plt.xlim(X1.min(), X1.max())
plt.ylim(X2.min(), X2.max())
# overlay the actual test observations, one colour per true class
for i, j in enumerate(np.unique(y_set)):
    plt.scatter(X_set[y_set == j, 0], X_set[y_set == j, 1],
                color = ListedColormap(('red', 'green'))(i), label = j)
plt.title('Logistic Regression (Test set)')
plt.xlabel('Age')
plt.ylabel('Estimated Salary')
plt.legend()
plt.show()
```
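In these plots the red and green regions are separated by a straight line. That is expected: with two features, logistic regression predicts class 1 when w1*x1 + w2*x2 + b > 0, so the decision boundary is the line where this score equals zero. The sketch below computes that line from `coef_` and `intercept_` on synthetic `make_classification` data (a stand-in, not the social-network dataset) and checks that points on it get probability 0.5. It assumes the second weight `w2` is non-zero.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic two-feature data standing in for the scaled age/salary features.
X, y = make_classification(n_samples=200, n_features=2, n_informative=2,
                           n_redundant=0, random_state=1)
classifier = LogisticRegression().fit(X, y)

w1, w2 = classifier.coef_[0]
b = classifier.intercept_[0]

# Boundary: w1*x1 + w2*x2 + b = 0  ->  x2 = -(w1*x1 + b) / w2  (assumes w2 != 0)
x1 = np.linspace(X[:, 0].min(), X[:, 0].max(), 5)
x2 = -(w1 * x1 + b) / w2
boundary_points = np.column_stack([x1, x2])

# Every point on that line sits exactly between the classes: P(class 1) = 0.5.
probs = classifier.predict_proba(boundary_points)[:, 1]
```

To overlay the line on one of the contour plots, you could call `plt.plot(x1, x2)` before `plt.show()`, computing `x1` and `x2` from the tutorial's own fitted `classifier`.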